LLM vs. SLM

Let’s first understand the basic concepts and differences between LLMs (Large Language Models) and SLMs (Small Language Models).

LLMs are really big and capable because they have a huge number of parameters (the learned weights that store what the model picked up during training), sometimes hundreds of billions or even trillions! This makes them very good at understanding many languages and what people mean when they talk or write.

Small Language Models (SLMs) are like the little siblings of LLMs. They have far fewer parameters, typically a few billion, so they’re simpler and lighter to run. SLMs are often trained on more focused, carefully selected data, which makes them good at particular tasks but less knowledgeable across a wide range of topics.

Building and training an LLM can cost millions of dollars, which is a lot of money. SLMs are a more budget-friendly choice for companies that want to use AI without spending too much.

Take ChatGPT, which is built on a Large Language Model (LLM), as an example: it knows a little about almost everything, from science to art, and keeps getting better over time.

Now think about texting on your phone when the keyboard guesses your next word. That’s a much smaller language model at work, running right on your device. It learns from what you usually type and gets better at predicting over time.
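The idea of a keyboard learning your next word can be illustrated with the simplest kind of statistical language model: a bigram counter that suggests the words most often seen after the previous one. This is a toy sketch for intuition only. Real phone keyboards use their own (often neural) models, and the class and method names here are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class BigramPredictor:
    """Hypothetical toy next-word predictor based on bigram counts."""

    def __init__(self) -> None:
        # For each word, count how often every other word followed it.
        self.counts: defaultdict[str, Counter[str]] = defaultdict(Counter)

    def learn(self, text: str) -> None:
        """Update the counts from a message the user typed."""
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.counts[prev][nxt] += 1

    def suggest(self, prev_word: str, k: int = 3) -> list[str]:
        """Return up to k words most frequently seen after prev_word."""
        followers = self.counts.get(prev_word.lower(), Counter())
        return [word for word, _ in followers.most_common(k)]
```

For example, after learning "see you at the gym", "see you soon", and "see you at home", `suggest("you")` returns `["at", "soon"]`, because "at" followed "you" twice and "soon" once. The more you type, the more the counts reflect your own habits.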

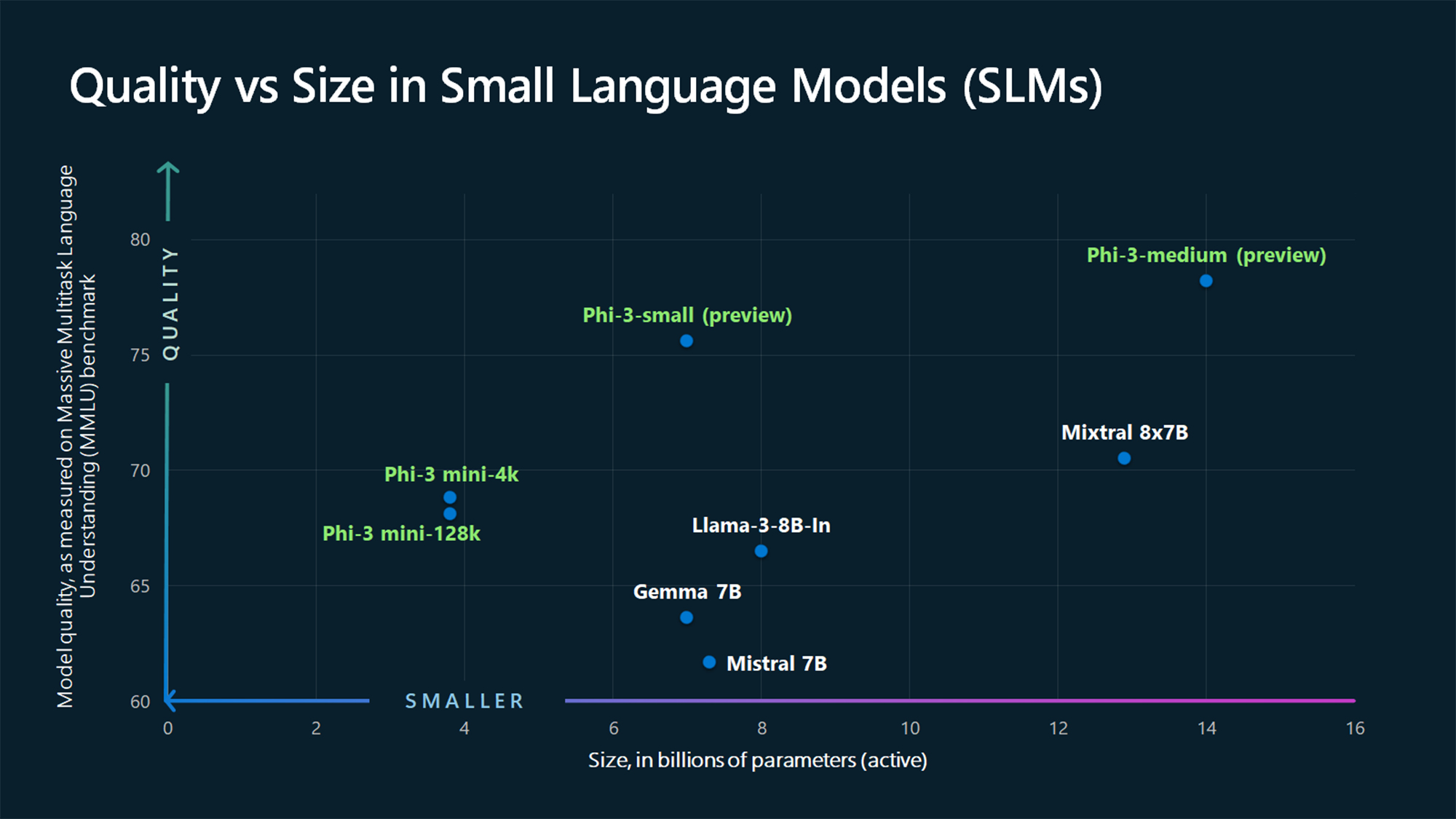

Microsoft Phi-3 series: Tiny but Mighty

Microsoft has introduced the Phi-3 series, a family of open AI models that Microsoft describes as highly capable and cost-effective. These small language models (SLMs) outperform models of the same size, and even some larger ones, across a variety of benchmarks covering language processing, logical reasoning, coding, and mathematics.

The Phi-3 Family

Phi-3-mini: This model has 3.8 billion parameters and comes in two context-length variants, 4K and 128K tokens. According to Microsoft, it is the first model in its class to support a context window as long as 128K tokens.

Phi-3-small: With 7 billion parameters, this model is a step up in complexity and capability.

Phi-3-medium: At 14 billion parameters, this is the largest and most capable model in the family.

Accessibility and Usage: The Phi-3-mini model is particularly noteworthy as it is freely accessible for both personal and commercial use. Users are encouraged to explore the documentation and consider developing their own AI/ML applications.

Design and Safety: Safety was a core consideration in the Phi-3 design. The models are also instruction-tuned, so they are ready to use out of the box and can follow a wide range of instructions phrased the way people naturally communicate.

Platform Availability: Developers can find Phi-3 models on various platforms, including Microsoft Azure AI Studio, Hugging Face, and Ollama. This multi-platform availability makes it easy to run the models locally or deploy them as needed.
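As one example of running Phi-3 locally, Ollama exposes a REST API on your machine. The sketch below assumes Ollama is installed and running on its default port (11434) and that the model has been pulled under the `phi3` tag (for example with `ollama pull phi3`). The helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build (but do not send) a POST request for Ollama's /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "phi3") -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Explain small language models in one sentence.")` returns the model's answer as a string. Setting `"stream": False` asks Ollama for a single JSON response instead of a stream of partial chunks, which keeps the client code short.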

Optimisation and Support: The models are optimised for ONNX Runtime, with support for Windows DirectML, and run across GPUs, CPUs, and mobile hardware. They are also available as NVIDIA NIM microservices.

Mobile Deployment: An innovative feature of the Phi-3 models is their ability to be quantized to 4 bits, optimizing them for mobile device deployment. This reduces memory usage, making them suitable for devices with limited resources.
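To see why 4-bit quantization matters on a phone, it helps to do the arithmetic: the weights alone take roughly parameters × bits ÷ 8 bytes, so Phi-3-mini's 3.8 billion parameters need about 7.6 GB at 16 bits but only about 1.9 GB at 4 bits (ignoring activations, the KV cache, and quantization metadata). The sketch below also shows a toy symmetric "absmax" 4-bit scheme. It is a simplified illustration under our own assumptions, not the quantization method Microsoft used for Phi-3, and the function names are hypothetical.

```python
from __future__ import annotations


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in decimal GB (weights only)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to signed 4-bit integers in [-7, 7]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map 4-bit integers back to approximate float weights."""
    return [v * scale for v in q]


for bits in (16, 8, 4):
    print(f"Phi-3-mini weights at {bits}-bit: ~{weight_memory_gb(3.8e9, bits):.1f} GB")
```

The loop prints about 7.6, 3.8, and 1.9 GB. Each weight in the toy scheme is stored as one of 15 integer levels plus a single shared scale, so the reconstruction error per weight is at most half a step (`scale / 2`); that loss of precision is the price paid for a 4× smaller footprint than 16-bit weights.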

Architecture and Techniques: Phi-3-mini is a transformer decoder model, built on the same attention-based architecture as most modern language models. According to Microsoft, its strong results for its size come mainly from its training data, which combines heavily filtered public web data with synthetic data.
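For readers curious about what an "attention mechanism" computes, here is a minimal, dependency-free sketch of causal (masked) scaled dot-product attention, the core operation inside decoder-style transformers. Each output row is a weighted average of value vectors, with weights given by softmax(q·k / √d) over the current and earlier tokens only. This is an illustration, not Phi-3's implementation: real models use many attention heads, learned projections to produce the query, key, and value vectors, and optimized tensor libraries, none of which are shown here.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(Q: list[list[float]], K: list[list[float]],
                     V: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention where token i sees only tokens 0..i."""
    d = len(Q[0])
    out = []
    for i, q in enumerate(Q):
        # Similarity of query i with every key up to and including position i.
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out.append([sum(w * V[j][c] for j, w in enumerate(weights))
                    for c in range(len(V[0]))])
    return out
```

Because token i only attends to tokens 0..i, the first output row is simply the first value vector. This masking is what lets a decoder model generate text one token at a time without peeking at words it has not produced yet.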

By focusing on these key aspects, the Phi-3 series stands as a versatile and powerful toolset for developers looking to harness the power of AI in their projects.

I’m curious to hear your thoughts! Where do you envision applying Phi-3 in your life? Please share your ideas in the comments below.

That wraps up our update for today. Farewell, and stay tuned for more exciting developments!